Archetype UK’s statement on generative AI tools

At Archetype UK, we’re genuinely excited and optimistic about technology. Technology makes us better and faster: it continually solves real problems for individuals, businesses and societies. And it keeps evolving and surprising.

The new breed of generative AI technologies is a case in point: it holds considerable promise for quickly gathering and combining information, brainstorming ideas, providing answers and automating workflows.

The results they can already produce are often astonishing, and the potential time savings are staggering.

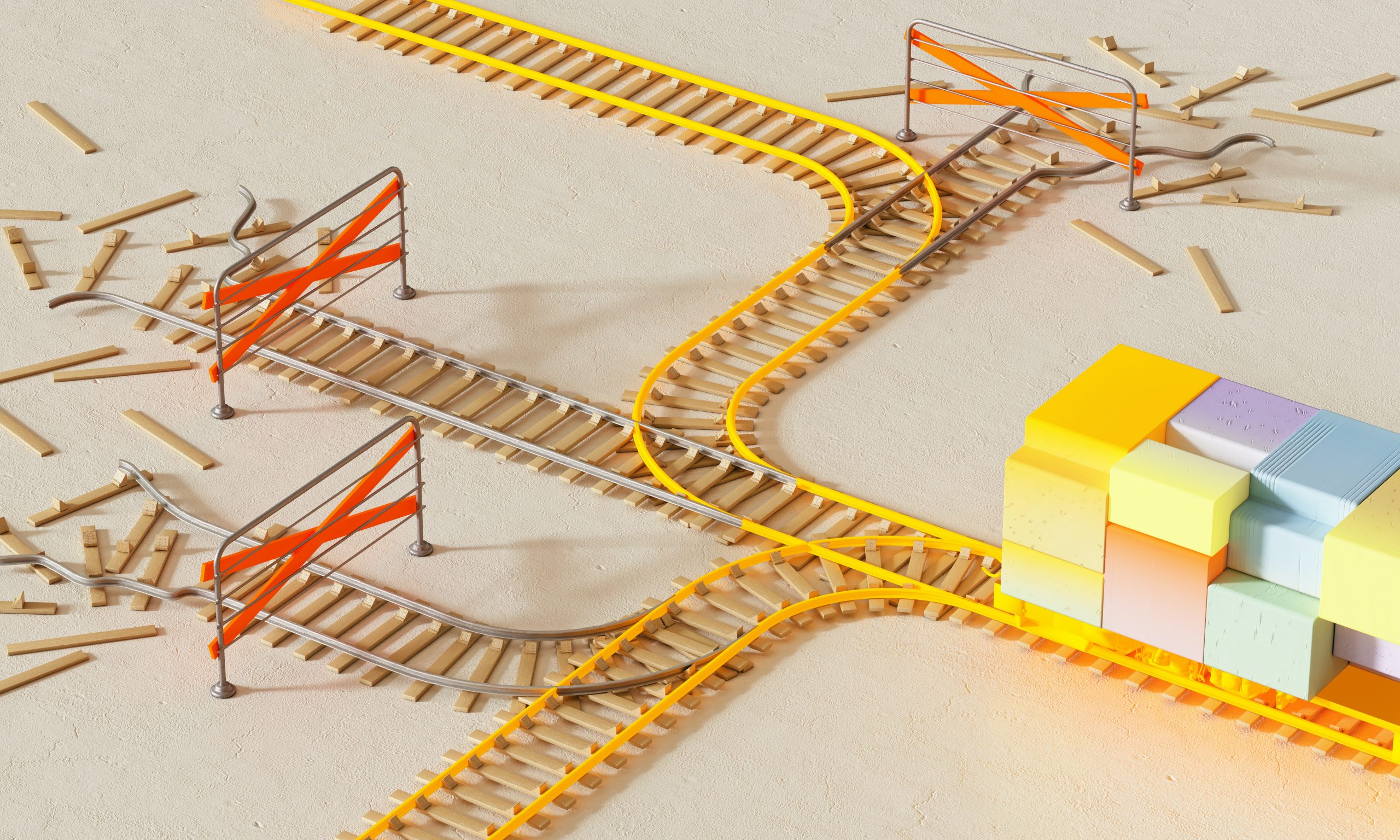

At other times, the results are deeply concerning. The output produced by current tools is very frequently incorrect, made-up, incoherent, biased, plagiarised, or inadequately and inappropriately sourced, and it veers stylistically between the mundane and the inhuman.

This mixture of results – from amazing to appalling – means we want to publish our own take on the use of generative AI at Archetype UK, to inform and reassure anyone who works with us.

We will experiment internally with AI tools to find ways we can deliver better results, generate productivity gains and enhance our creativity. Through this work, we’re also developing proficiency in selecting tools and crafting effective prompts, so that we can deliver value faster as the tools mature. Because the number of tools, and their potential use cases, is so vast, we’ll judge the results of these experiments, and whether we’ll consider applying them to client work, on a case-by-case basis.

We’ll exercise judgement. We will treat results created by AI tools in the same way we’d treat work created by an eager but entirely inexperienced intern. Their work might be totally unfit for purpose, need someone (a human) to start again from scratch, need some serious editing, or – who knows? – the tool might have got the results almost bang-on on its first try. We’ll assess all results with a critical eye every time they’re created to ensure they meet or exceed existing standards before they go anywhere.

We’ll share the value. When we are able to make productivity gains through AI tools, we’ll work with clients to ensure they reap the extra value that is created.

We’ll ask permission. Where we find workflows that will benefit our output for clients, we’ll ask if that’s OK, explaining the how and why, as well as the guard rails we’ll put in place to ensure our work is better with AI, not just faster. Our reputation and integrity are paramount, so we’ll be entirely transparent in this. We won’t take shortcuts that impair results, and we won’t allow AI output into client work unless it also reflects our talent, experience and judgement.

Your call. We won’t use generative AI tools if our clients don’t want us to – we humans at Archetype UK are a pretty good bunch and still have a lot to offer.

Privacy. We won’t put any confidential client information into AI tools. As has always been the case at Archetype, any information we can’t relay to the general public won’t go any further than those on the client team who need to know it. We take client confidentiality and Non-Disclosure Agreements extremely seriously, and won’t risk breaching our clients’ trust through AI tools.

Third parties. When we use freelancers or other third parties for client work (e.g. filmmakers and other content creators), we’ll ensure they agree to the same rules on generative AI practice that we’ve agreed for the rest of the agency.

We’ll protect creators. One further effect of the existence of generative AIs is their potential adverse impact on the livelihoods of creators. We believe creators are vital to a healthy society, so we’ll continue to employ, and champion, professional writers, designers, illustrators, musicians and filmmakers whenever we are able. We also won’t use AI tools to ape the content of any living creators.

We’re sure that the tools will improve: the progress since the public release of ChatGPT in November 2022 has been extremely rapid. That said, advances in extremely complex computer science problems aren’t predictable. Most relevantly, we’re still nowhere near General AI, despite tools designed to create the impression that we are much closer.

The technology is nonetheless evolving quickly and our position on AI at the agency, and its potential uses, will evolve in step. The next version of this policy may well be very different. If you’re as fascinated by AI as we are, get in touch.

We humans love to chat.

[Updated: 03/05/23]